Performance Blog: Content Delivery Networks (CDN) Explained

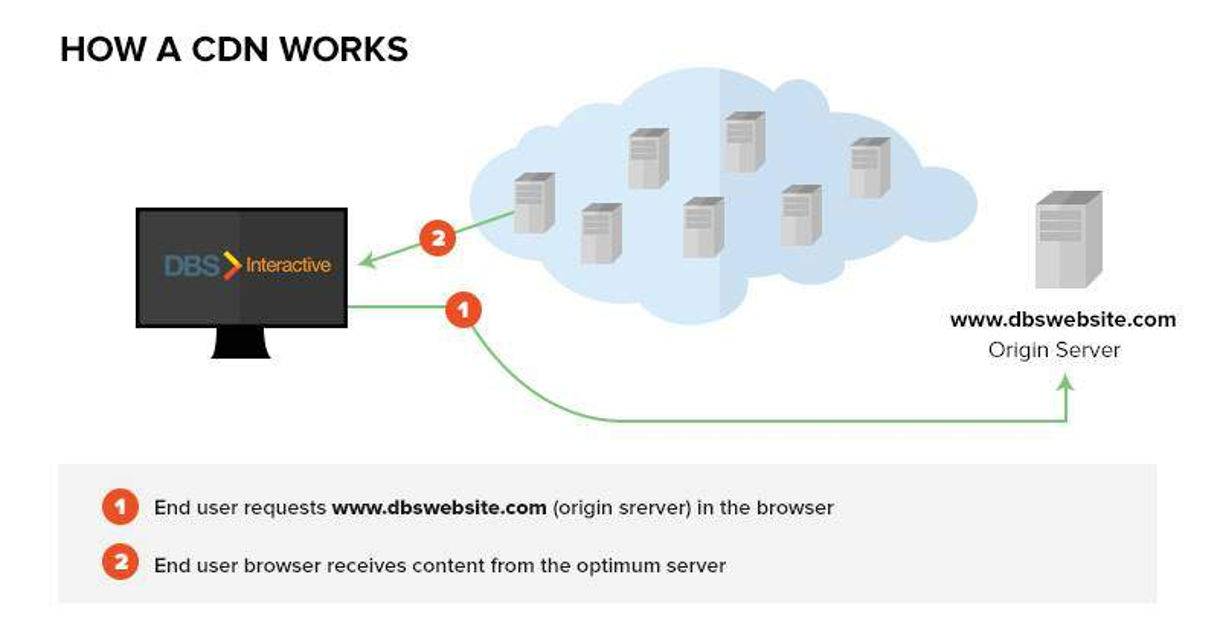

A Content Delivery Network (CDN) is a globally distributed network of web servers whose purpose is to deliver content faster and with high availability. The content is replicated throughout the CDN so that it exists in many places at once.

Some of the big names in this market are Akamai, Amazon CloudFront, and EdgeCast.

Why do CDNs exist? Bottom line: a better user experience. A secondary benefit is more efficient use of network resources.

CDNs do this primarily with two techniques:

- Replicate important content across multiple, globally distributed data centers so it is closer to end users, and therefore faster to download.

- Apply server optimizations based on content type to deliver each kind of content to the user as efficiently as possible.

First, let's look at how web servers in general deliver content to a user's browser.

- A user makes a request to load a web "page", often by clicking a link.

- The user's web browser sends a request to the web server for that content.

- The web server sends back the content that makes up the requested web "page", which typically consists of many separate resources.

Pretty simple stuff. Traditionally, the same web server is asked to deliver all content related to that request, to every requester, regardless of location or content type. Really big sites might have a load-balanced web server "pool", but, again traditionally, those servers would sit in the same data center.

But under the covers, there is a lot more going on. First there is a DNS lookup to get the IP address of the web server. The web server then typically sends many mixed content types back to the browser: images, JavaScript, CSS, text, videos, and what have you. A typical web page embeds many such resources, often more than 60 separate files to be fetched, and sometimes more than 100 per page load! Some of these require additional actions by the browser in order to render the content.
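To make the "many separate files" point concrete, here is a minimal Python sketch that lists the resources a browser would go on to request after receiving a page's HTML. It uses only the standard library's `html.parser`; the tag/attribute table, the `embedded_resources` helper and the sample page are hypothetical illustrations, and real browsers discover further resources (fonts referenced from CSS, `srcset` images, script-initiated requests) that this sketch ignores.

```python
from html.parser import HTMLParser

# Hypothetical, simplified table: tag -> attribute that points at an embedded resource.
RESOURCE_ATTRS = {
    "img": "src",
    "script": "src",
    "link": "href",
    "video": "src",
    "audio": "src",
    "source": "src",
}


class ResourceCollector(HTMLParser):
    """Collects the URLs a browser would have to fetch to render a page."""

    def __init__(self) -> None:
        super().__init__()
        self.resources = []

    def handle_starttag(self, tag, attrs):
        wanted = RESOURCE_ATTRS.get(tag)
        if wanted is None:
            return  # not a tag that embeds a resource (e.g. <a>, <div>)
        for name, value in attrs:
            if name == wanted and value:
                self.resources.append(value)


def embedded_resources(html: str) -> list:
    parser = ResourceCollector()
    parser.feed(html)
    parser.close()
    return parser.resources


page = """
<html><head>
  <link rel="stylesheet" href="/css/site.css">
  <script src="/js/app.js"></script>
</head><body>
  <img src="/img/logo.png">
  <img src="/img/hero.jpg">
</body></html>
"""

for url in embedded_resources(page):
    print(url)  # each of these is one more request back to the server
```

Even this tiny page triggers four follow-up requests after the HTML itself; a real page with 60 to 100 resources multiplies that accordingly.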

We can break these content types down into several distinct categories:

- Dynamic content: this content is generated on the fly by the web server using any of several common web programming languages such as PHP, Ruby, or Java.

- Static content: this is content that typically does not change very often and does not require generation. Images, CSS, and JavaScript fall in this category.

- Streaming content: videos or audio files that are played via a web browser control.

These types differ enough that no single web server is going to do a very good job serving all of them efficiently. Each has its own demands and optimizations. Dynamic content requires a lot of memory and CPU from the web server, but uses relatively little bandwidth. Static and streaming content, on the other hand, are the reverse: they require relatively little memory but use a lot of bandwidth. Trying to use the same web server for both scenarios can result in the worst of both worlds: the server holds on to the memory it needs for dynamic content while also trying to reserve the resources it needs for non-dynamic content. A single server cannot do both efficiently at the same time.
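One way to act on this is to send each content category to a server pool tuned for it. The Python sketch below classifies a request URL as dynamic, static or streaming by file extension and picks a pool. The pool names and extension lists are hypothetical assumptions for illustration; a real setup would typically also consider URL prefixes and the `Content-Type` of the response.

```python
from pathlib import PurePosixPath
from urllib.parse import urlsplit

# Hypothetical server pools, one per content category described above.
POOLS = {
    "dynamic": "app-servers",      # CPU/memory heavy, relatively low bandwidth
    "static": "static-servers",    # low memory, high bandwidth, easy to cache
    "streaming": "media-servers",  # long-lived, very high bandwidth
}

# Assumed extension lists, for illustration only.
STATIC_EXT = {".css", ".js", ".png", ".jpg", ".jpeg", ".gif", ".svg", ".ico", ".woff2"}
STREAMING_EXT = {".mp4", ".webm", ".mp3", ".m3u8"}


def classify(url: str) -> str:
    """Return 'dynamic', 'static' or 'streaming' for a request URL."""
    path = urlsplit(url).path                 # drops scheme, host and query string
    ext = PurePosixPath(path).suffix.lower()  # e.g. ".png", or "" for "/cart"
    if ext in STREAMING_EXT:
        return "streaming"
    if ext in STATIC_EXT:
        return "static"
    # Anything else (e.g. /cart, /products.php) is assumed to be generated on the fly.
    return "dynamic"


def route(url: str) -> str:
    return POOLS[classify(url)]


for u in ("/img/logo.png?v=3", "/videos/intro.mp4", "/products.php?id=7"):
    print(u, "->", route(u))
```

Keeping the classification in one small function makes the split explicit: the memory-hungry application servers never see image or video traffic, and the bandwidth-oriented servers never run application code.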

The other traditional problem is that all content is in one location. If our server is in Chicago, people in the Midwestern US will likely get pretty good response times from it and a better user experience than those in Hong Kong, Germany, South Africa, or Florida. Those users are much farther away, and due to the laws of physics, the content will simply take longer to get there, possibly significantly longer. This is compounded by the number of requests, most of which will be for our "static", high-bandwidth content.
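The "laws of physics" part can be estimated. The sketch below computes a best-case round trip time from a hypothetical Chicago origin to sample cities: the great-circle distance (haversine formula), doubled for the round trip, divided by the speed of light in optical fiber (roughly 200,000 km/s, about two thirds of its speed in a vacuum). The city coordinates are approximate and chosen for illustration; real network paths are longer than the great circle and add routing and queuing delays, so measured times will be higher.

```python
import math

EARTH_RADIUS_KM = 6371.0
# Light in optical fiber travels at roughly 200,000 km/s, i.e. 200 km per millisecond.
FIBER_KM_PER_MS = 200.0


def great_circle_km(lat1, lon1, lat2, lon2):
    """Haversine distance between two points given in decimal degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def min_rtt_ms(a, b):
    """Best case: there and back along a straight fiber with no queuing."""
    return 2 * great_circle_km(*a, *b) / FIBER_KM_PER_MS


CHICAGO = (41.88, -87.63)  # assumed origin server location
USERS = {                  # approximate coordinates of sample user locations
    "Miami": (25.76, -80.19),
    "Frankfurt": (50.11, 8.68),
    "Johannesburg": (-26.20, 28.05),
    "Hong Kong": (22.32, 114.17),
}

for city, coords in USERS.items():
    print(f"{city:>12}: >= {min_rtt_ms(CHICAGO, coords):5.1f} ms per round trip")
```

No amount of server tuning in Chicago can get a Hong Kong user below roughly 125 ms per round trip; only moving the content closer can.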

The time it takes data to travel from the origin server to the end user is known as "latency", and the time for a request to go out and its response to come back is the "round trip time" (RTT); both are measured in milliseconds, and the two terms are often used interchangeably. When we want "fast" web content, one of the things we want is the lowest latency possible. Milliseconds may not sound like a big deal, but a typical web page may be 1 megabyte (a million bytes) or more, composed of many separate files that each need at least one round trip, so those milliseconds start to add up.
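A rough model shows how quickly round trips accumulate. The Python sketch below counts only time spent waiting on round trips for a page with a given number of resources, using the three RTTs measured later in this post. The model is a simplifying assumption: one round trip each for the DNS lookup, the TCP connection and the HTML itself, then the resources fetched in "waves" over six parallel connections (a common per-host default in HTTP/1.1 browsers). It ignores bandwidth, TLS, TCP slow start, caching and HTTP/2 multiplexing.

```python
import math


def latency_cost_ms(rtt_ms: float, resources: int, parallel: int = 6) -> float:
    """Rough lower bound on time spent purely waiting on round trips.

    Simplified model (an assumption, not a browser specification):
      1 RTT  DNS lookup
      1 RTT  TCP connection setup
      1 RTT  request/response for the HTML page itself
      then `resources` files fetched in waves of `parallel` requests,
      each wave costing at least one more RTT.
    """
    waves = math.ceil(resources / parallel)
    return rtt_ms * (3 + waves)


for rtt in (12, 69, 180):
    print(f"RTT {rtt:>3} ms, 60 resources: ~{latency_cost_ms(rtt, 60):.0f} ms of waiting")
```

With 60 resources this model gives 13 round trips: about 156 ms at a 12 ms RTT, but about 2.3 seconds at 180 ms, before a single byte of bandwidth is counted.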

The latency factor is an even bigger concern for mobile content providers. Cell networks by their nature have much higher latency than land-based networks. Just because 4G is advertised with great bandwidth does not mean it has great latency (the two are very different network metrics). And it doesn't.

To illustrate the differences, I "pinged" one of DBS's web servers from 3 different devices and got these results:

- DBS office LAN on a high-speed, business-class 10 Mbps network: 12 milliseconds (best)

- Home system on AT&T U-verse 18 Mbps network: 69 milliseconds

- My Android 4G smartphone (Verizon): 180 milliseconds (worst)

Bandwidth has nothing to do with latency! The office connection, with less bandwidth than the home connection, had far lower latency.

That's a 15x difference from best case to worst case (12 ms vs. 180 ms), all on what most of us would consider better-than-average connections. 3G networks have even worse latency, with typical round trip times as high as 400 milliseconds. Cell networks are also more prone to packet loss (another network metric), which can severely hurt performance.
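A back-of-the-envelope model shows why bandwidth alone does not make a connection feel fast. Treat fetching one file as one round trip plus the time to push its bits through the link. The RTTs are the ones measured above and the office and home bandwidths are the figures quoted for those connections; the 20 Mbps for the 4G phone is an assumed value for illustration, since it was not measured.

```python
def transfer_ms(size_bytes: int, rtt_ms: float, bandwidth_mbps: float) -> float:
    """Idealized time to fetch one file: one round trip plus serialization time.

    Ignores TCP slow start, packet loss and protocol overhead.
    """
    bits = size_bytes * 8
    return rtt_ms + bits / (bandwidth_mbps * 1_000_000) * 1000


LINKS = {
    # name: (measured RTT in ms, bandwidth in Mbps)
    "office LAN": (12, 10),
    "home U-verse": (69, 18),
    "4G phone": (180, 20),  # 20 Mbps is an assumed figure, not measured
}

for size_label, size in (("20 KB image", 20_000), ("10 MB video", 10_000_000)):
    for name, (rtt, mbps) in LINKS.items():
        print(f"{size_label:>12} over {name:<12}: {transfer_ms(size, rtt, mbps):7.1f} ms")
```

For the small image the office LAN wins easily (about 28 ms versus about 78 ms at home) despite having the least bandwidth, because the round trip dominates; only for the large file does bandwidth take over. With dozens of files per page, that latency term is paid again and again.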

Mobile content delivery requires a much more aggressive optimization strategy if it is to approach desktop responsiveness.

So perhaps you are thinking: does a second or two or three really make much of a difference? A number of studies from Yahoo, Google and others show as much as a 10% drop in conversion rates for each additional second of page load time (see this report for one example). Once again proving that time is money!

CDNs were developed to help solve both of the issues above: latency is greatly reduced, and content is delivered more efficiently.
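One common way to keep copies of content at edge locations is a pull-through cache: the first request for a file at an edge location goes back to the origin server, and later requests are answered locally until the copy expires. The Python sketch below is a simplified illustration of that idea, not how Akamai, CloudFront, EdgeCast or any other CDN actually implements caching; the `EdgeCache` class, the `origin` function and the 60-second TTL are hypothetical, and real CDNs also honor HTTP caching headers, support purging, and much more. The clock is injectable so expiry can be demonstrated without waiting.

```python
import time
from typing import Callable, Dict, Tuple


class EdgeCache:
    """Minimal pull-through cache, as a CDN edge node might run (simplified).

    On a miss or an expired entry, the edge fetches the file from the origin
    once, then serves later requests from its local copy until the TTL runs out.
    """

    def __init__(self, fetch_from_origin: Callable[[str], bytes], ttl_s: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch_from_origin
        self._ttl = ttl_s
        self._clock = clock
        self._store: Dict[str, Tuple[bytes, float]] = {}  # url -> (body, expires_at)
        self.hits = 0
        self.misses = 0

    def get(self, url: str) -> bytes:
        now = self._clock()
        entry = self._store.get(url)
        if entry is not None and entry[1] > now:
            self.hits += 1
            return entry[0]  # served from the nearby edge copy
        self.misses += 1
        body = self._fetch(url)  # the long trip back to the origin server
        self._store[url] = (body, now + self._ttl)
        return body


# Usage with a fake origin and a fake clock (both hypothetical).
origin_calls = []

def origin(url):
    origin_calls.append(url)
    return f"contents of {url}".encode()

fake_now = [0.0]
edge = EdgeCache(origin, ttl_s=60, clock=lambda: fake_now[0])
edge.get("/img/logo.png")  # miss: fetched from origin
edge.get("/img/logo.png")  # hit: served locally
fake_now[0] = 61
edge.get("/img/logo.png")  # expired: fetched from origin again
print(edge.hits, edge.misses, len(origin_calls))  # 1 2 2
```

The TTL is the main design trade-off: a longer TTL means more requests are served at edge latency, while a shorter one means users see updated content sooner.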

CDNs are not new; they have been around since the dial-up days and have long been used by really big players like Yahoo and YouTube. But they have become more widely available, and costs have dropped to the point where they are within reach of smaller players as well.

Is a CDN right for every situation? No; in fact, for many it is overkill. A small organization with a local audience would get very little benefit, and the costs would outweigh whatever gains there might be.

So who should consider using a CDN?

- Content providers with a national or international audience, or aspiring to one.

- Higher-bandwidth content providers

- Mobile content providers

CDNs are not a panacea. They cannot significantly speed up sites that have fundamental issues with the primary hosting environment or application configuration. They are best used once all other potential performance issues have been addressed.

What about costs?

Most of the cost is in setting up and managing this type of hosting environment. The additional complexity can translate into more time spent configuring and managing resources.